Varnish Cluster

Introduction

I recently set up a cluster of Varnish servers. Varnish is a caching reverse proxy generally used to speed up websites. It features its own configuration language, VCL, which can be used to customize the behavior of Varnish in almost any way.

Performance

I currently have 3 Varnish servers configured on DigitalOcean, with a network load balancer in place so each server gets an equal share of the traffic. (The cluster originally ran on AWS EC2 instances behind an elastic load balancer.) I ran stress tests against the infrastructure and was very happy with the results, although I couldn't get an exact measurement since my own computer was the bottleneck in the tests.

Reliability

The primary reason for the load balancer is quick failover in the event of a server failure. A failure should be detected within 10 seconds, after which the failed server no longer receives traffic. On AWS I used multiple availability zones, which should mitigate problems caused by localized datacenter faults.

I have since stopped using AWS due to reliability issues and high bandwidth fees. I ran failure tests on both AWS and DigitalOcean and confirmed that the load balancer rapidly stops sending traffic to a faulty server. I have configured the health check to also verify connectivity to the upstream servers via the VPN, so most failure states should cause the load balancer to stop sending traffic to the faulty server.
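The failover behaviour described above can be modelled with a small sketch. It is only an illustration: the 5 second interval and the threshold of 2 failed checks are assumptions chosen so that detection fits within the 10 seconds mentioned, and the names `check_passes` and `HealthTracker` are hypothetical rather than anything taken from the real load balancer.

```python
from dataclasses import dataclass


def check_passes(varnish_ok: bool, upstream_reachable: bool) -> bool:
    """A check only passes if Varnish responds AND it can reach its upstreams."""
    return varnish_ok and upstream_reachable


@dataclass
class HealthTracker:
    """Tracks consecutive failed checks for one server (hypothetical model)."""
    interval_s: int = 5        # assumed seconds between checks
    unhealthy_after: int = 2   # assumed consecutive failures before removal
    failures: int = 0
    healthy: bool = True

    def record(self, passed: bool) -> bool:
        """Record one check result and return the current health state."""
        if passed:
            # Simplification: a single passing check restores the server.
            self.failures = 0
            self.healthy = True
        else:
            self.failures += 1
            if self.failures >= self.unhealthy_after:
                self.healthy = False
        return self.healthy

    def detection_window_s(self) -> int:
        """Approximate upper bound on how long a failure goes unnoticed."""
        return self.interval_s * self.unhealthy_after
```

Because the check also requires upstream connectivity, a server whose VPN link is down is treated the same as a server whose Varnish process has crashed.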

Purpose

I am slowly restructuring my entire online infrastructure. Since I run various services which all have different needs, I generally have unused resources. For example, I have some applications which use a lot of CPU and almost no RAM, whereas other applications use a lot of RAM and little CPU.

My plan is to consolidate some of my infrastructure, allowing for more effective use of resources. Making this Varnish cluster was step one of that consolidation. Since many of my websites include a caching layer and a load balancer layer, putting all of my online services behind a single Varnish cluster allows me to have those resources available without duplicating them for each service.

Since I now only have to manage a single cluster, I can put more time and resources into this single setup than I would if I had multiple separate Varnish setups.

VCL features

Since I can customize the behavior of Varnish using Varnish Configuration Language (VCL), I am able to implement things which further enhance the entire setup. For now I have decided to keep my custom VCL private.

Rate Limiting

A really nice feature available in VCL is rate limiting, provided by the vsthrottle module. This allows me to configure rate limits with an extreme level of customization. For example, I run IoTPlotter, which accepts API requests from IoT devices, and sometimes rate limiting needs to be applied when devices misbehave.

I have implemented a rate limiting system which counts the number of error status codes received from the backend, and then limits that API key until the errors stop or reduce. I did this because I have seen some devices malfunction and continuously send garbage data, which the application server rejects, while the device keeps retrying.

By blocking this kind of traffic at the Varnish layer, a lot of resources can be freed up at the application server layer (which also saves money).
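The error-counting idea can be sketched as follows. This is a model of the logic only, not the private VCL: it uses a simple sliding window instead of the token bucket that vsthrottle provides, and the class name `ErrorLimiter`, the threshold, and the window length are all assumptions.

```python
from collections import defaultdict, deque


class ErrorLimiter:
    """Blocks a key once it causes too many backend errors within a window."""

    def __init__(self, max_errors: int = 5, window_s: float = 60.0):
        self.max_errors = max_errors        # assumed threshold
        self.window_s = window_s            # assumed window length in seconds
        self._errors = defaultdict(deque)   # key -> timestamps of recent errors

    def _prune(self, key: str, now: float) -> None:
        """Drop error timestamps that have aged out of the window."""
        q = self._errors[key]
        while q and now - q[0] >= self.window_s:
            q.popleft()

    def is_blocked(self, key: str, now: float) -> bool:
        """Call before forwarding a request; True means reject it at the proxy."""
        self._prune(key, now)
        return len(self._errors[key]) >= self.max_errors

    def record_response(self, key: str, status: int, now: float) -> None:
        """Call after the backend answers; only error statuses are counted."""
        if status >= 400:
            self._errors[key].append(now)


limiter = ErrorLimiter(max_errors=3, window_s=60)
for t in (0, 1, 2):
    limiter.record_response("device-a", 400, now=t)
print(limiter.is_blocked("device-a", now=3))    # True
print(limiter.is_blocked("device-a", now=63))   # False
```

While a key is blocked its requests never reach the backend, so no new errors are recorded and the block lifts by itself once the old errors age out of the window.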

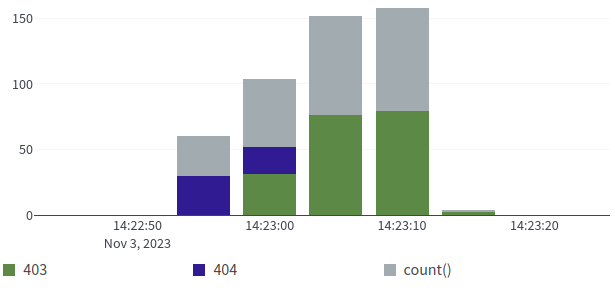

I also applied this rate limit system to the entire Varnish setup (i.e. all of my websites), with some tweaks to block malicious web crawlers and automated scans. The following chart shows status codes returned to a malicious web crawler: initially it was receiving 404s from the backend, then the traffic was mitigated, causing it to always receive 403s.

Although this particular bot was crawling fast enough to trigger the standard rate limit too, this system also blocks malicious crawlers that crawl at a very slow rate if they keep causing bad server responses (pages which don't exist, pages which aren't allowed to be accessed, etc).

Access Control

Varnish can also be used to restrict access to certain parts of websites. For example, this website is powered by DokuWiki, but there is no good built-in way to restrict the login page to just me. Since I am the only person who ever logs in, I have configured Varnish to block access to the login endpoints. I am still able to access the login page by using a separate administration VPN connection.

Although an accessible login page isn't a security vulnerability in itself, the login form would otherwise be the only line of defense against attack. Blocking it at the Varnish layer adds an extra hurdle for attackers and reduces the overall attack surface.
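The access rule described above can be sketched like this. The VPN address range is hypothetical, and the sketch assumes the login endpoint is reached through DokuWiki's `do=login` action; the real setup may cover more endpoints.

```python
from ipaddress import ip_address, ip_network
from urllib.parse import parse_qs, urlsplit

ADMIN_NETWORK = ip_network("10.8.0.0/24")  # hypothetical admin VPN range


def is_login_request(url: str) -> bool:
    """True when the URL asks for the login action (?do=login)."""
    query = parse_qs(urlsplit(url).query)
    return "login" in query.get("do", [])


def is_allowed(client_ip: str, url: str) -> bool:
    """Login requests are only allowed from the admin network."""
    if is_login_request(url):
        return ip_address(client_ip) in ADMIN_NETWORK
    return True
```

A request that is not allowed would be answered with a 403 at the proxy, so it never reaches the wiki at all.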

Backend Server Rules

Also within VCL, I am able to configure which requests go to which backend application server. Generally I don't do anything too complicated here, but I am able to choose different backends for different URLs without resorting to different subdomains.
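As a rough illustration of choosing a backend by URL rather than by subdomain, the sketch below matches on host and path prefix. The hostnames, prefixes, and backend names are all made up for the example.

```python
# (host, path prefix, backend name); every value here is hypothetical.
ROUTES = [
    ("example.org", "/api/", "api_backend"),
    ("example.org", "/", "web_backend"),
]
DEFAULT_BACKEND = "web_backend"


def choose_backend(host: str, path: str) -> str:
    """Return the backend of the first route matching host and path prefix."""
    for route_host, prefix, backend in ROUTES:
        if host == route_host and path.startswith(prefix):
            return backend
    return DEFAULT_BACKEND
```

Routes are checked in order, so the more specific `/api/` prefix has to come before the catch-all `/`.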

I am also able to set up load balancing which can be as complicated or as simple as I like. This means I can add load balancing to all/any of my sites whenever I want without having to pay for additional load balancers at AWS/DigitalOcean etc.
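At the simple end of that range, load balancing can be as little as a round-robin rotation that skips unhealthy backends. The sketch below models that idea; the class name `RoundRobin` and the backend names in the usage are hypothetical.

```python
from itertools import cycle
from typing import Iterable, Optional


class RoundRobin:
    """Rotates through backends in order, skipping any marked unhealthy."""

    def __init__(self, backends: Iterable[str]):
        self._backends = list(backends)
        self._healthy = set(self._backends)
        self._cycle = cycle(self._backends)

    def set_health(self, backend: str, healthy: bool) -> None:
        """Mark a backend as healthy or unhealthy."""
        if healthy:
            self._healthy.add(backend)
        else:
            self._healthy.discard(backend)

    def pick(self) -> Optional[str]:
        """Return the next healthy backend, or None if there is none."""
        # Look at each backend at most once per call.
        for _ in range(len(self._backends)):
            candidate = next(self._cycle)
            if candidate in self._healthy:
                return candidate
        return None
```

For example, with backends `app1`, `app2`, and `app3`, marking `app2` unhealthy makes the rotation alternate between `app1` and `app3` until it recovers.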

I can also set up dynamic rate limits based on the backend used or overall backend health.
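One possible way to tie a rate limit to backend health is to scale it by the fraction of healthy backends. This is only a sketch of the idea; the function name `dynamic_limit`, the scaling rule, and the floor value are assumptions.

```python
def dynamic_limit(base_limit: int, healthy: int, total: int, floor: int = 1) -> int:
    """Scale a per-client request limit by the fraction of healthy backends."""
    if total <= 0:
        raise ValueError("total must be positive")
    scaled = base_limit * healthy // total
    # Never drop below the floor, even when every backend is unhealthy.
    return max(floor, scaled)
```

With a base limit of 100 and 1 of 3 backends healthy, this gives a limit of 33, so the remaining backend is not asked to absorb the full load.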

Maintenance

Since I have created a high-availability cluster, it is easy to perform maintenance without closing or losing any connections. I have an Ansible playbook which performs updates and other changes on the servers one by one. During maintenance, the affected server is taken out of the healthy pool, meaning it receives no traffic.

Only when one server has finished successfully will the next server start to perform maintenance.
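The one-at-a-time procedure can be modelled as a short loop. This is a sketch of the logic rather than the playbook itself: the `drain`, `maintain`, and `restore` callables are hypothetical stand-ins for whatever the playbook actually does. Ansible's `serial: 1` play keyword gives similar one-host-at-a-time behaviour, though whether the playbook uses it is an assumption.

```python
from typing import Callable, Iterable, List


def rolling_maintenance(
    servers: Iterable[str],
    drain: Callable[[str], None],
    maintain: Callable[[str], bool],
    restore: Callable[[str], None],
) -> List[str]:
    """Maintain servers one at a time and stop at the first failure.

    Returns the servers that completed maintenance successfully.
    """
    done = []
    for server in servers:
        drain(server)               # take the server out of the healthy pool
        if not maintain(server):    # run the updates; False means failure
            break                   # leave it drained and skip the rest
        restore(server)             # return it to the pool before moving on
        done.append(server)
    return done
```

Stopping at the first failure means a bad update can take out at most one server, leaving the others untouched and still serving traffic.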