
Simple URL Shortener

Introduction

I decided I wanted my own branded URL shortener, so I purchased the domain joeh.to for this purpose. Instead of using one of the many open-source URL shortening packages available, I wrote some small configurations and scripts for Apache and Varnish. This saves me from having to install bloated software with features I will never use.

Apache Config

I did not want any server-side scripts such as PHP to run, so I kept all of the logic within Apache itself. I used the following configuration in my Apache VirtualHost file:

ServerName joeh.to
ServerAlias www.joeh.to

RewriteEngine On
# Load the "short path -> destination URL" map from the DBM file
RewriteMap redirects dbm=db:/etc/apache2/user_conf/redirects.db
# $1 is the path captured by the RewriteRule pattern; only redirect if the map has an entry for it
RewriteCond ${redirects:$1} !=""
RewriteRule ^(.*)$ ${redirects:$1} [redirect=permanent,last]

ErrorDocument 404 "<h1>Not found</h1>Check for typos and try again."
DocumentRoot /var/www/html
ErrorLog ${APACHE_LOG_DIR}/error.log
CustomLog ${APACHE_LOG_DIR}/access.log combined

This configures Apache to look up the redirects to perform in the file /etc/apache2/user_conf/redirects.db. This file is an Apache DBM file (here in Berkeley DB format), which can be generated using the following command:

httxt2dbm -f db -i redirects.txt -o /etc/apache2/user_conf/redirects.db

The contents of redirects.txt need to follow this structure:

/ https://jhewitt.net/
/test https://www.youtube.com/watch?v=dQw4w9WgXcQ
/example https://example.com

The first part of each line is the path as shown in the browser (without the host/domain), and the second part is the URL to redirect to.
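For illustration, the Python below is a small model (not Apache itself) of how these entries are matched: the RewriteRule pattern ^(.*)$ captures the whole URL path including its leading slash, and the RewriteCond only allows the redirect when the map returns a non-empty value for that path. The names parse_redirects and resolve are hypothetical, and the 404 branch assumes no file under DocumentRoot happens to match the path.

```python
from typing import Optional


def parse_redirects(text: str) -> dict[str, str]:
    """Parse redirects.txt content: one '<path> <destination>' pair per line."""
    redirects = {}
    for number, line in enumerate(text.splitlines(), start=1):
        fields = line.split()
        # Mirrors the "exactly 2 elements per line" rule used by the update script.
        if len(fields) != 2:
            raise ValueError(f"line {number}: expected 2 fields, got {len(fields)}")
        path, destination = fields
        redirects[path] = destination
    return redirects


def resolve(path: str, redirects: dict[str, str]) -> tuple[int, Optional[str]]:
    """Model of the RewriteCond/RewriteRule pair for a URL path (query string excluded)."""
    # ^(.*)$ captures the full path including the leading "/", so keys must start with "/".
    destination = redirects.get(path, "")
    if destination != "":
        return 301, destination  # [redirect=permanent] sends a 301
    # No map entry: the condition fails and the request falls through to DocumentRoot.
    return 404, None
```

Note that a key written without its leading slash (for example "test" instead of "/test") would never match.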

No additional commands are needed after running httxt2dbm: mod_rewrite notices that the map file has changed, so the new redirects take effect immediately.

In my experience, DBM lookups are very fast. I expect this method of redirection to handle thousands of URLs without slowing down, but you might want to do more research before using it on a system which needs to redirect more than 100,000 URLs.

Varnish Config

For those unfamiliar, Varnish is a reverse proxy primarily designed for caching HTTP content. It has its own configuration language, the Varnish Configuration Language (VCL).

I didn't want to rent yet another server just to run the Apache redirect server, nor did I want to add it to an existing server. I decided to host it on my home server instead, but since that hardware isn't “production reliable”, I wrote some VCL so that my Varnish server cluster caches the redirects.

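I used the following config, which goes in the vcl_backend_response subroutine (where bereq and beresp are available):

sub vcl_backend_response {
    # Only apply these rules to responses for the URL shortener's domain
    if (bereq.http.host ~ "joeh.to") {
        if (beresp.status == 301) {
            # Permanent redirects: fresh for 1 hour, then servable while stale for up to 4 weeks
            set beresp.ttl = 1h;
            set beresp.grace = 4w;
        } else {
            # Anything else (e.g. a 404): cache only briefly
            set beresp.ttl = 30s;
            set beresp.grace = 1d;
        }
    }
}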

This instructs Varnish to cache 301s (the permanent redirects my URL shortener is configured to send) with a TTL of 1 hour and a grace period of 4 weeks. Any further requests for the same URL within that hour are served from the Varnish cache and never reach my Apache server. Once the TTL expires, the cached object is kept for up to 4 more weeks of grace. Any other response, such as a 404 for a short URL that does not exist yet, is only cached for 30 seconds, so newly added URLs start working quickly.

If another request arrives after the hour has passed but before the grace period runs out, Varnish serves the cached redirect immediately and also sends a request to Apache in the background to refresh the cache. If the Apache server is unavailable, the old cached copy is still used and there is no interruption to service.

This means that once a URL has been fetched successfully from Apache, it keeps working for up to 4 weeks after that fetch, even if my home server is having issues. Every successful background refresh restarts that window.
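As a rough mental model of the timeline above, the Python sketch below classifies a cached 301 by how long ago it was last fetched from Apache. It is a simplification, not Varnish's actual logic: it ignores beresp.keep, any req.grace override and request coalescing, and the name cache_state is hypothetical.

```python
from datetime import timedelta

TTL = timedelta(hours=1)    # beresp.ttl for 301s in the VCL above
GRACE = timedelta(weeks=4)  # beresp.grace for 301s in the VCL above


def cache_state(age: timedelta, ttl: timedelta = TTL, grace: timedelta = GRACE) -> str:
    """Classify a cached object by its age since the last successful fetch from Apache.

    "fresh"   -> served from cache, Apache is not contacted
    "grace"   -> stale copy served immediately, background fetch sent to Apache
    "expired" -> the request has to wait for Apache
    """
    if age < ttl:
        return "fresh"
    if age < ttl + grace:
        return "grace"
    return "expired"
```

For example, a redirect last fetched 3 weeks ago is still in grace, so it keeps working even while the home server is offline.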

Since statistics are a popular feature of URL shorteners, the Varnish logs can be sent to a log analysis tool such as Graylog if required. The Varnish logs are the ones to use, because requests served from the cache never reach Apache.
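If a full log platform is overkill, a quick alternative is to count redirects per short path from varnishncsa output. The sketch below assumes varnishncsa's default NCSA combined-style format; varnishncsa typically writes an absolute URL (http://host/path) in the request line, so the code accepts both absolute and plain /path targets. The function name count_redirect_hits is hypothetical.

```python
import re
from collections import Counter
from urllib.parse import urlsplit

# Matches the quoted request line ("%r") followed by the status code ("%s").
REQUEST_RE = re.compile(r'"(?P<method>[A-Z]+) (?P<target>\S+) [^"]*" (?P<status>\d{3})')


def count_redirect_hits(lines):
    """Count 301 responses per short path from NCSA/combined-style log lines."""
    hits = Counter()
    for line in lines:
        match = REQUEST_RE.search(line)
        # Skip unparseable lines and anything that was not a redirect (e.g. 404s).
        if match is None or match.group("status") != "301":
            continue
        # urlsplit handles "http://joeh.to/test?x=1" and "/test" alike; keep only the path.
        hits[urlsplit(match.group("target")).path] += 1
    return hits
```

Piping varnishncsa output into a file and running this over it once a day gives a crude per-URL click count.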

The Varnish↔Apache communication happens over a VPN, so I do not need to expose any part of my home server directly to the internet. Some basic security rules are also implemented within Varnish VCL to block certain requests, along with security hardening on both servers.

Updating the URL list

I do not want to SSH into the Apache server every time I add a new shortened URL, so I wrote a quick and dirty Python script which pulls the latest redirects.txt from GitHub and recompiles the database.

The script has 3 parts. First, it checks the Apache access log for a recent request to the webhook URL, which GitHub calls every time there is a new commit. This is a particularly dirty part of the script, but it allows me to avoid installing PHP or anything else potentially vulnerable on the web server.

Next, the repo is pulled and some basic checks are performed on the redirects.txt file (just checking that every line contains exactly 2 elements).

Finally, it calls the httxt2dbm command to rebuild the database.

This script needs to be run every minute from a cron job. It can be run less often (in which case SEARCH_TIME must be increased to match the new interval), but running it every minute means changes pushed to Git take effect quickly.

Start by cloning your repo on the server:

git clone git@github.com:Your_user/Your_repo.git

Mine is cloned into a directory called Short_URLs. The script uses relative paths, so it needs to run from the directory containing that clone.

The script looks like this:

import datetime
import re
import subprocess
import sys

# Matches the first [...] group in an Apache "combined" log line, i.e. the timestamp.
TIMESTAMP_PATTERN = r'\[(.*?)\]'

# Path to the redirects.txt file, pulled from Git.
TXT_PATH = "Short_URLs/redirects.txt"

# The path which Apache is configured to use for the redirects DB.
DB_PATH = "/etc/apache2/user_conf/redirects.db"

# The Apache access log file to check for recent webhook calls.
ACCESS_LOG_PATH = "/var/log/apache2/access.log"

# The Git directory where redirects.txt will be pulled into.
GIT_DIR = "Short_URLs/"

# The (randomly generated) path which GitHub sends the webhook request to.
WEBHOOK_PATH = "/webhook-url-here"

# How often this script is run, in seconds.
SEARCH_TIME = 60

# Part 1: look for a webhook request logged within the last SEARCH_TIME seconds.
can_continue = False
now = datetime.datetime.now(datetime.timezone.utc)
with open(ACCESS_LOG_PATH, 'r') as logreader:
    for line in logreader:
        if WEBHOOK_PATH not in line:
            continue
        matches = re.findall(TIMESTAMP_PATTERN, line)
        if matches:
            # Apache timestamps carry a UTC offset (e.g. "+0100"), so compare
            # timezone-aware datetimes instead of stripping the offset.
            dt = datetime.datetime.strptime(matches[0], "%d/%b/%Y:%H:%M:%S %z")
            dt_delta = (now - dt).total_seconds()
            if dt_delta <= SEARCH_TIME:
                can_continue = True
                print("tdelta is", dt_delta)
                break

if not can_continue:
    print("Webhook not triggered")
    sys.exit(0)

# Part 2: pull the latest redirects.txt and sanity-check it.
subprocess.run(["git", "-C", GIT_DIR, "pull"], check=True)

with open(TXT_PATH, 'r') as filereader:
    for linecounter, line in enumerate(filereader, start=1):
        # Every line must be exactly "<short path> <destination URL>".
        if len(line.split()) != 2:
            print("Bad line", linecounter)
            sys.exit(1)

# Part 3: rebuild the DBM file which Apache reads the redirects from.
subprocess.run(["httxt2dbm", "-f", "db", "-i", TXT_PATH, "-o", DB_PATH], check=True)
print("Updated")

There are definitely nicer ways to do this. Another (possibly better) option to explore is something like this, but my way gives me a little more flexibility with my Git setup. For example, if I really wanted to, I could use the GitHub web editor to add URLs to my shortener.

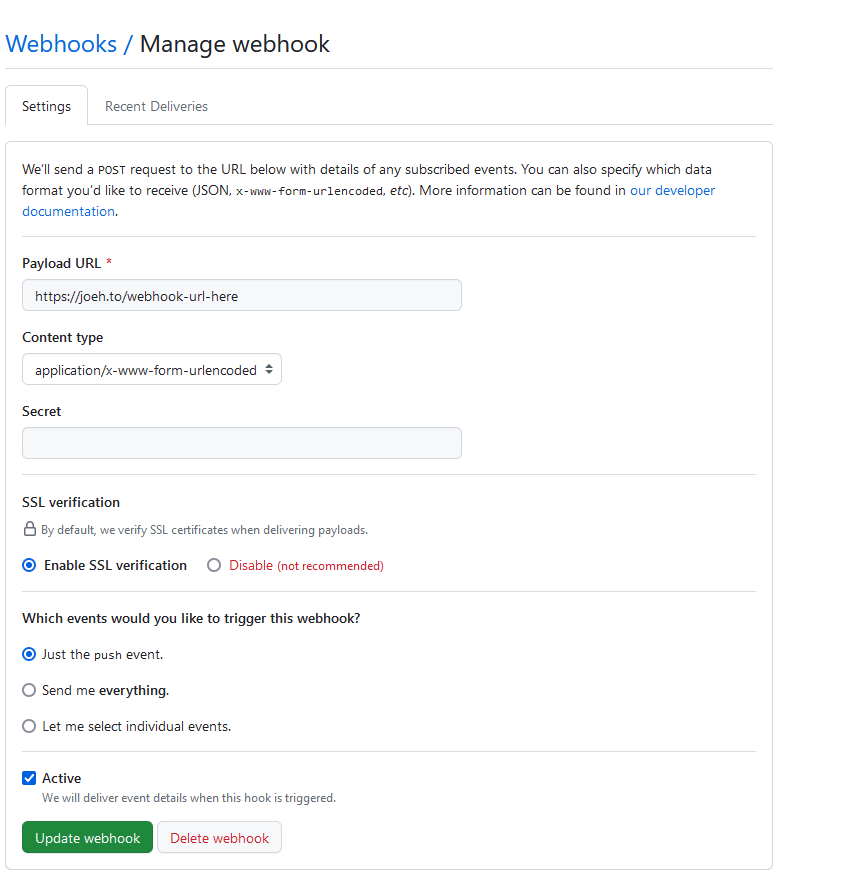

The webhook on the GitHub side should look something like this:

Since nothing actually processes the webhook request (the script only looks for it in the access log), we cannot make use of the webhook secret. This is a security flaw in the design; however, the worst case if it is exploited is my server pulling from GitHub every minute, and I decided to take that risk. GitHub sends the webhook request to a randomly generated URL, which is “good enough”. In the future, I will likely add a rule to my Varnish configuration to block requests to the webhook URL that do not originate from GitHub.
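For context, this is roughly what "making use of the secret" would involve: GitHub signs each delivery with an HMAC-SHA256 of the raw request body, keyed with the secret, and sends it in the X-Hub-Signature-256 header as "sha256=<hex digest>". Checking it needs something that can read the request body, which this design deliberately avoids. The function below is a hypothetical sketch for such a handler, not part of my setup.

```python
import hashlib
import hmac


def verify_github_signature(secret: bytes, body: bytes, signature_header: str) -> bool:
    """Check GitHub's X-Hub-Signature-256 header against the raw request body."""
    expected = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
    # compare_digest runs in constant time, so a timing attack cannot reveal
    # how many leading characters of a forged signature were correct.
    return hmac.compare_digest(expected.encode(), signature_header.encode())
```

Any request that fails this check could be dropped before it ever triggers a pull.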

API / Automation

Since all URLs are just stored in Git, it's trivial to write a script or API which edits this file and pushes the change. I will likely make a simple Bash command which takes 2 arguments (the short path and the destination URL), appends them to the end of the file, and pushes the change to Git.
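To keep all examples in one language, here is the same idea as a Python sketch rather than Bash. It assumes redirects.txt sits at the root of the cloned repo and that git is already configured to push; append_redirect and commit_and_push are hypothetical names. The push is what fires the GitHub webhook, so the server picks up the change on its next cron run.

```python
import subprocess
from pathlib import Path


def append_redirect(txt_path: Path, short_path: str, destination: str) -> None:
    """Append one '<short path> <destination>' line to redirects.txt."""
    if not short_path.startswith("/"):
        raise ValueError("short path must start with '/'")
    # Each field must be a single token, or the "2 elements per line" check fails.
    if len(short_path.split()) != 1 or len(destination.split()) != 1:
        raise ValueError("fields must be non-empty and contain no whitespace")
    text = txt_path.read_text() if txt_path.exists() else ""
    existing = {line.split()[0] for line in text.splitlines() if line.strip()}
    if short_path in existing:
        raise ValueError(f"{short_path} is already in use")
    # Make sure the new entry starts on its own line.
    if text and not text.endswith("\n"):
        text += "\n"
    txt_path.write_text(text + f"{short_path} {destination}\n")


def commit_and_push(repo_dir: Path, message: str) -> None:
    """Commit redirects.txt and push it to the remote."""
    subprocess.run(["git", "-C", str(repo_dir), "add", "redirects.txt"], check=True)
    subprocess.run(["git", "-C", str(repo_dir), "commit", "-m", message], check=True)
    subprocess.run(["git", "-C", str(repo_dir), "push"], check=True)
```

Usage would be something like append_redirect(Path("Short_URLs/redirects.txt"), "/gh", "https://github.com") followed by commit_and_push(Path("Short_URLs"), "Add /gh").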

It also wouldn't be hard to write a script which generates a random string, checks that it doesn't already exist in the file, and adds it along with the required destination URL.
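A minimal sketch of the random-string part, assuming short paths made of 6 ASCII letters and digits (both the alphabet and the length are arbitrary choices here, and random_short_path is a hypothetical name). It uses the secrets module so the generated paths are not predictable, and simply retries on the rare collision with an existing entry.

```python
import secrets
import string

# Characters allowed in generated short paths (an assumption; adjust to taste).
ALPHABET = string.ascii_letters + string.digits


def random_short_path(existing: set[str], length: int = 6) -> str:
    """Return a random '/xxxxxx' path that is not already a key in redirects.txt."""
    while True:
        candidate = "/" + "".join(secrets.choice(ALPHABET) for _ in range(length))
        # With 62**6 (about 57 billion) possibilities, collisions are rare, so retrying is cheap.
        if candidate not in existing:
            return candidate
```

The existing set can be built from the first field of each line in redirects.txt, and the result passed on together with the destination URL.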

Summary

This definitely isn't the most elegant solution; however, I really like that it doesn't require a bunch of dependencies which may not be maintained, nor thousands of lines of code which may or may not introduce vulnerabilities.

The management of the URL list can be handled on another device manually, or a web interface can be hosted elsewhere which handles new entries being added to the file.

The service is also very fast: the only noticeable delay is the latency between the user and the Varnish server. Further speedups could be gained by using features offered by Cloudflare which I am not currently using.